Identifying structure of student essays

overview

Students must be able to read scientific texts with deep understanding and create coherent explanations that connect causes to events. Can we use natural language processing (NLP) to evaluate, from the essays they write, whether they are doing so?

research questions

- Can we automatically identify important concepts in students' scientific explanations?

- Can we identify causal relations in their explanations?

- Can we use these to identify an essay's causal structure?

- How many training examples are necessary?

- Can we automatically assemble training materials for a new topic?

people

- Simon Hughes, PhD defended in 2019.

- Clayton Cohn, MS Thesis defended in 2020.

- Keith Cochran, PhD student, in progress.

- Peter Hastings, squadron leader.

- Noriko Tomuro, associate.

- M. Anne Britt, NIU, collaborator.

progress

- Simon Hughes's dissertation demonstrated \(F_1\) scores averaging 0.84 for RQ 1 with a bi-directional RNN.

- For RQ 2, Simon demonstrated \(F_1\) scores between 0.73 and 0.79 using a bi-directional RNN and a novel shift-reduce parser.

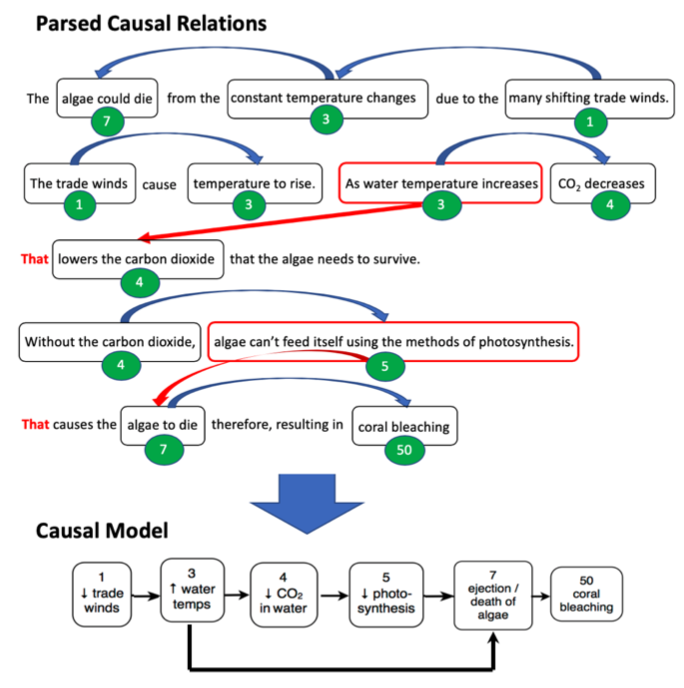

- For RQ 3, Simon created a re-ranking approach that scored between 0.75 and 0.83 on identifying the entire essay structure (Fig. 1).

- For RQ 4, Hastings et al. showed that 100 annotated essays produced a significant portion of the performance that 1000 essays did.

- RQ 5 (automatically assembling training materials for a new topic): work in progress.
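The \(F_1\) scores reported above are the harmonic mean of precision and recall. As a minimal sketch of how such a score can be computed for concept identification, the Python below assumes each sentence carries a set of concept codes and uses micro-averaging over all sentences. The function name, the codes `C1` to `C5`, and the sample data are hypothetical, and the project's own evaluation may have averaged differently.

```python
from typing import List, Set, Tuple


def precision_recall_f1(
    gold: List[Set[str]], predicted: List[Set[str]]
) -> Tuple[float, float, float]:
    """Micro-averaged precision, recall, and F1 over per-sentence label sets."""
    if len(gold) != len(predicted):
        raise ValueError("gold and predicted must cover the same sentences")

    tp = fp = fn = 0
    for gold_codes, pred_codes in zip(gold, predicted):
        tp += len(gold_codes & pred_codes)   # codes correctly predicted
        fp += len(pred_codes - gold_codes)   # codes predicted but not annotated
        fn += len(gold_codes - pred_codes)   # codes annotated but missed

    precision = tp / (tp + fp) if (tp + fp) else 0.0
    recall = tp / (tp + fn) if (tp + fn) else 0.0
    denom = precision + recall
    f1 = 2 * precision * recall / denom if denom else 0.0
    return precision, recall, f1


# Hypothetical annotations for four sentences (not taken from the project corpus).
gold = [{"C1"}, {"C2", "C3"}, set(), {"C4"}]
predicted = [{"C1"}, {"C2"}, {"C5"}, {"C4"}]

precision, recall, f1 = precision_recall_f1(gold, predicted)
print(precision, recall, f1)  # 0.75 0.75 0.75
```

In this toy example three codes are matched, one is predicted spuriously, and one is missed, so precision, recall, and \(F_1\) all come out to 0.75.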

future work

- Applying transformer-based deep learning models such as BERT to the tasks above, and expanding to other domains.

- Developing specialized deep learning methods for inferring text structure.

- Exploring ensembling methods.

selected publications

- Hughes, Simon Mark, "Automatic inference of causal reasoning chains from student essays" (2019). College of Computing and Digital Media Dissertations. 19. https://via.library.depaul.edu/cdm_etd/19

- Peter Hastings, M. Anne Britt, Katy Rupp, Kristopher Kopp, and Simon Hughes. Computational analysis of explanatory essay structure. In Keith Millis, Debra Long, Joseph P. Magliano, and Katja Wiemer, editors, Multi-Disciplinary Approaches to Deep Learning, Routledge, New York, 2019.

- Jennifer Wiley, Peter Hastings, Dylan Blaum, Allison J Jaeger, Simon Hughes, Patricia Wallace, Thomas D Griffin, and M Anne Britt. Different Approaches to Assessing the Quality of Explanations Following a Multiple-Document Inquiry Activity in Science. International Journal of Artificial Intelligence in Education, pp. 1–33, Springer, 2017.

Figure 1: Generating a causal model from parsed causal relations. Hughes (2019), p. 162.
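
The idea behind Figure 1, assembling individual causal relations into one causal model, can be illustrated with a small sketch. This is not the parser or re-ranking method from the dissertation. It only shows, under simplifying assumptions, how a list of already-extracted (cause, effect) pairs could be merged into a directed graph and read back as causal chains. The function names and the concept codes `C1` to `C4` are hypothetical.

```python
from typing import Dict, Iterable, List, Set, Tuple


def build_causal_graph(relations: Iterable[Tuple[str, str]]) -> Dict[str, Set[str]]:
    """Merge (cause, effect) pairs into an adjacency map; duplicates collapse."""
    graph: Dict[str, Set[str]] = {}
    for cause, effect in relations:
        graph.setdefault(cause, set()).add(effect)
        graph.setdefault(effect, set())  # make sure leaf concepts appear as nodes
    return graph


def causal_chains(graph: Dict[str, Set[str]]) -> List[List[str]]:
    """List every chain from a root (no incoming edge) to a node with no unvisited successor.

    If the graph has no root (for example, a pure cycle), the result is empty.
    """
    has_incoming = {effect for effects in graph.values() for effect in effects}
    roots = sorted(node for node in graph if node not in has_incoming)
    chains: List[List[str]] = []

    def walk(node: str, path: List[str]) -> None:
        # Skip nodes already on the path so a cycle cannot loop forever.
        successors = sorted(s for s in graph[node] if s not in path)
        if not successors:
            chains.append(path)
            return
        for successor in successors:
            walk(successor, path + [successor])

    for root in roots:
        walk(root, [root])
    return chains


# Hypothetical relations extracted from one essay; the repeated pair is intentional.
relations = [("C1", "C2"), ("C2", "C3"), ("C1", "C4"), ("C2", "C3")]
graph = build_causal_graph(relations)
chains = causal_chains(graph)
print(chains)  # [['C1', 'C2', 'C3'], ['C1', 'C4']]
```

A graph representation is one natural choice here because repeated mentions of the same relation collapse into a single edge, and chains that share a cause share a prefix.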